UC researchers use ultrasound and games for speech therapy

Converting ultrasound images into games will help children visualize their speech patterns

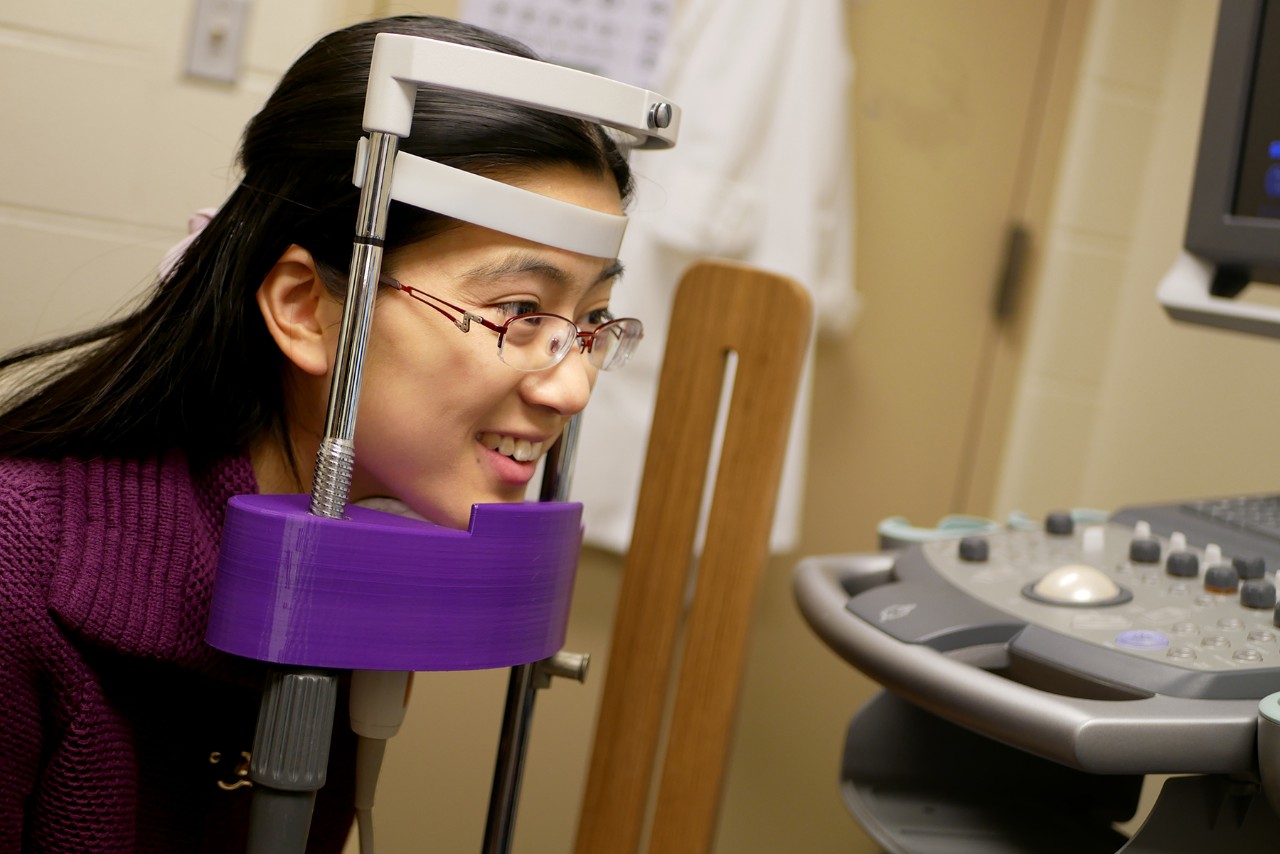

An ultrasound machine displays the shape of a person's tongue for tongue tracking purposes. Photo/Corrie Stookey/CEAS Marketing

Up to 2 percent of people who reach adulthood have speech disorders that affect their ability to pronounce certain sounds. Conventional speech therapy relies on clinicians and verbal feedback, but some children do not respond to this approach. An effective alternative to conventional therapy is visual feedback therapy, such as ultrasound imaging.

Traditional ultrasound imaging for speech therapy offers real-time views of the tongue during speech that teach children to produce correct tongue shapes for difficult sounds. These images, however, are often difficult for both the clinician and the child to interpret, and progress is hard to measure.

Researchers at the University of Cincinnati are finding ways to use ultrasound biofeedback therapy in a simplified, real-time system. The team was recently awarded a five-year grant from UC and the National Institutes of Health called “Simplified Ultrasound Biofeedback for Speech Remediation.”

“UC has children enrolled in ultrasound therapy, but this therapy gives you pretty complex images,” says Hannah Woeste (biomedical engineering ’19), who is using this project for her capstone. Woeste is working alongside fellow undergraduate student Jack Masterson (biomedical engineering ’21) and biomedical engineering graduate student Sarah Li.

Beyond simplifying these images, the team hopes to redirect children's attention to an external focus. Rather than spending so much time concentrating on what they're doing, children will be able to see their speech patterns in a simplified game.

“It’s like that feeling when you really try to think about doing something, and you just can’t,” says Woeste. “You’re trying too hard, and that’s the sort of problem we are looking to solve.”

The project is another example of UC's commitment to research as part of its strategic direction called Next Lives Here.

An ultrasound machine displays the image of a tongue as UC student Jack Masterson works with the programming software MATLAB. Photo/Corrie Stookey/CEAS Marketing

Tongue tracking

A major part of this research is tongue tracking. Patterns of tongue motion during speech production can provide insights for speech therapy. People with normal speech, for example, have different tongue patterns than those with speech disorders.

By tracking tongue movements through ultrasound imaging, researchers and speech therapists can pinpoint exactly where these differences are.

“We’re looking for a way to quantify the differences in the tongue between those people with speech disorders and those people with normal speech,” says Masterson, who is making a program in MATLAB to track tongue movements. After analyzing all the data, the team can start identifying the differences.

With 300 identifiable displacement values for those with speech disorders, this is no easy task. Add in the natural variation between people and it becomes even more difficult.

A head stabilizer for children using ultrasound imaging for speech therapy. Photo/Corrie Stookey/CEAS Marketing

“You won’t just have a single pattern, because everyone has their own way of speaking,” says Woeste. “When you say normal and disordered, you automatically think there’s a large variation, but there really is not.”

Tongue tracking from ultrasound data has existed for some time, but not with the speed and accuracy the team is aiming for. Currently, the team can track and display displacements of the three major tongue parts (root, dorsum and blade) at 20 frames per second, so patients can watch a simplified display of their tongue motion on a screen as they talk.

The next step is to translate this data into a game, with the goal of driving tongue movements in the right direction.

Imagine a Mario video game, explains Woeste, where instead of using a controller to make Mario jump over blocks and kick Koopa shells, you would use the movements of your tongue. Saying certain sounds like “r” or “l” would dictate the movements of your character. This simplified and interactive feedback could help children progress in their own speech therapy.
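To make the idea concrete, here is a minimal Python sketch of how per-frame tongue displacements might drive a game character. It is not the team's method (their tracking program is written in MATLAB). Only the 20 frames-per-second rate and the three tracked parts (root, dorsum and blade) come from the article; the millimeter units, the `TongueFrame` record, the "jump" mapping and the 2 mm threshold are all hypothetical choices for illustration.

```python
from dataclasses import dataclass

FRAME_RATE_HZ = 20  # display rate reported in the article
FRAME_INTERVAL_S = 1.0 / FRAME_RATE_HZ  # 0.05 s between frames
TONGUE_PARTS = ("root", "dorsum", "blade")  # the three tracked tongue parts


@dataclass
class TongueFrame:
    # Hypothetical per-frame displacements, assumed to be in millimeters.
    root: float
    dorsum: float
    blade: float


def game_action(frame: TongueFrame, target_part: str, threshold_mm: float) -> str:
    """Return 'jump' if the chosen tongue part moved at least threshold_mm in
    the (assumed positive) target direction, otherwise 'idle'. Hypothetical mapping."""
    if target_part not in TONGUE_PARTS:
        raise ValueError(f"unknown tongue part: {target_part}")
    displacement = getattr(frame, target_part)
    return "jump" if displacement >= threshold_mm else "idle"


def run_session(frames, target_part="dorsum", threshold_mm=2.0):
    """Turn a stream of frames into (timestamp in seconds, action) pairs."""
    return [
        (round(i * FRAME_INTERVAL_S, 3), game_action(f, target_part, threshold_mm))
        for i, f in enumerate(frames)
    ]


frames = [
    TongueFrame(root=0.1, dorsum=0.5, blade=0.0),
    TongueFrame(root=0.2, dorsum=2.4, blade=0.3),
    TongueFrame(root=0.0, dorsum=1.9, blade=0.1),
]
print(run_session(frames))  # [(0.0, 'idle'), (0.05, 'jump'), (0.1, 'idle')]
```

Keeping the feedback to a single yes-or-no action per frame mirrors the article's goal of a simplified display, in place of the full ultrasound image.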

Research for a cause

All three students are interested in careers in clinical research, but for Li, the graduate student on the team, working on speech therapy is more personal. Growing up with parents from Taiwan and Hong Kong, Li was exposed to multiple languages as a child.

“While my mother picked up the pronunciation for the different languages well, my father mispronounces many words in Mandarin and in English,” says Li.

By the time she started school, she was unable to pronounce the “r” sound in words like “girl” and required speech therapy. It wasn’t until she started taking Mandarin in middle school that she realized she had been mispronouncing many words in that language as well.

“This research project has made me realize that I never really thought about how I was learning pronunciation,” says Li. “The idea of using a more quantitative method for learning is therefore very interesting to me. Given how often my father mispronounces words, I can also see how important correct speech pronunciation is in communication.”

UC graduate student Sarah Li and undergraduate student Jack Masterson stand beside the lab's ultrasound machine. Photo/Corrie Stookey/CEAS Marketing

The team is still in phase one of its research. UC Professor of Biomedical Engineering Douglas Mast, Ph.D., is co-principal investigator of the grant with UC Professor of Communication Sciences and Disorders Suzanne Boyce, Ph.D., and UC Professor of Psychology Michael Riley, Ph.D. Mast mentors Woeste, Masterson and Li on their various projects.

Though the NIH grant started in June of 2018, research into this project has been going on since 2015. It started as a Strategic Collaborative Grant from the UC Office of Research, awarded to Boyce, Mast and Riley. Their collaborators now also include Renee Seward, faculty in the College of Design, Art, Architecture and Planning (DAAP).


Maintaining communication between all these departments challenges the students, but it also highlights the cross-disciplinary nature of engineering and the positive innovations that can come from it.

As the team members move forward in their research, they hope to put a new spin on conventional speech therapy. Blending complex ultrasound imaging and tongue tracking with a simplified gaming interface may be the key to promoting correct speech patterns in children everywhere.

Featured image at top: UC graduate student Sarah Li demonstrates ultrasound imaging for speech therapy using a head stabilizer. Photo/Corrie Stookey/CEAS Marketing
